Our Brains Learn Completely Differently Than Experts Assumed for Nearly 70 Years

Researchers just overturned a fundamental, 70-year-old understanding of how our brains learn, paving the way for faster, more advanced AI applications and a different approach to medical treatments for brain disorders. [This article first appeared on LongevityFacts. Author: Brady Hartman.]

Researchers have overturned the way scientists thought our brains learn, a view that had been widely accepted for almost 70 years.

This discovery, based on new experimental evidence, paves the way for more advanced artificial intelligence (AI) applications, such as machine learning and deep learning algorithms that imitate brain function at much higher speed and with advanced features. Moreover, the research may change how doctors view brain disorders such as Alzheimer’s disease and may alter treatments for other forms of dementia.

Since 1949, a central tenet of neuroscience has been that our brains learn by modifying and strengthening the synapses.

Now, a group of scientists led by Professor Ido Kanter at Bar-Ilan University in Israel has overturned the 70-year-old, commonly held belief that our brains learn only by strengthening the synapses. The team published its experimental results in the March 23rd issue of Scientific Reports, part of the highly respected Nature family of scientific journals.

How Our Brain Learns

The human brain is a complex network containing around 90 billion cells called neurons, which communicate with each other via connections called synapses. Neurons pass signals along their length to the synapses. When a signal reaches the end of a neuron, at the synapse, it is converted into a chemical messenger called a neurotransmitter. The neurotransmitter travels across the synaptic gap to the neuron on the other side, which converts it back into an electrical signal and sends it down the line.

The number of these synaptic connections is mind-boggling: researchers estimate that neurons interconnect at 100 trillion to 1,000 trillion synapses. Neurons have specialized appendages called dendrites and axons. Dendrites bring information to the center of the neuron, and axons carry information away from it. A neuron collects incoming signals through several extremely long, branching arms called dendritic trees.

Every thought, experience, physical sensation and feeling triggers thousands of neurons, which form a neural network. According to Hebb’s axiom, when we repeat an experience, the brain learns to trigger the same neurons each time by strengthening the synapses between them.

Hebb’s Axiom

In 1949, Canadian neuropsychologist Donald Hebb proposed that learning occurs by strengthening the synapses, with neurons serving merely as the computational elements. His idea became the widely held assumption in neuroscience and is popularly summarized as Hebb’s axiom:

“neurons that fire together wire together.”

The phrase paraphrases Hebb’s 1949 proposal, which has remained a central tenet of neuroscience ever since.
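Hebb’s idea is often written in textbooks as a simple weight-update rule: a synapse grows stronger in proportion to the product of the activity arriving at it and the activity of the neuron it feeds, Δw = η·x·y. The sketch below is a minimal illustration of that textbook formulation, not code from Hebb or from Kanter’s study; the learning rate `eta`, the three-synapse neuron and the activity values are all assumptions chosen for illustration.

```python
def hebbian_update(weights, pre, post, eta=0.1):
    """One plain Hebbian step: w_i <- w_i + eta * pre_i * post.

    A synapse is strengthened only when its input (pre_i) is active
    at the same time as the receiving neuron (post); silent inputs
    keep their current weight.
    """
    return [w + eta * x * post for w, x in zip(weights, pre)]


# Hypothetical neuron with three input synapses, all starting equally weak.
w = [0.1, 0.1, 0.1]
# Inputs 0 and 2 "fire together" with the neuron (post = 1); input 1 stays silent.
pre = [1.0, 0.0, 1.0]
post = 1.0

for _ in range(5):  # repeat the same "experience" five times
    w = hebbian_update(w, pre, post)

print(w)
```

After five repetitions, the two synapses whose inputs fired alongside the neuron end up about six times stronger than the silent one, which is the "fire together, wire together" effect in its simplest form.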

Wiring neurons together into a neural network is beneficial because it helps us store and recall information, and thereby learn, efficiently. For example, a neural network helps you remember the name of a new acquaintance by creating connections. However, the network can be stubborn when we try to rewire neurons to respond to a familiar situation in a different way, giving rise to expressions like ‘you can’t teach an old dog new tricks.’

Unseating Hebb’s Axiom

Kanter’s team combined theoretical results with experiments on cultured nerve cells to show that learning is performed by several dendrites, rather than by the synapses that were believed to fulfill this role for 70 years. As Professor Kanter puts it in a press release accompanying the new study:

“The newly discovered process of learning in the dendrites occurs at a much faster rate than in the old scenario suggesting that learning occurs solely in the synapses. In this new dendritic learning process, there are a few adaptive parameters per neuron, in comparison to thousands of tiny and sensitive ones in the synaptic learning scenario.”

The new learning scenario suggests that learning occurs in a few dendrites that are in much closer proximity to the neuron. As Kanter says,

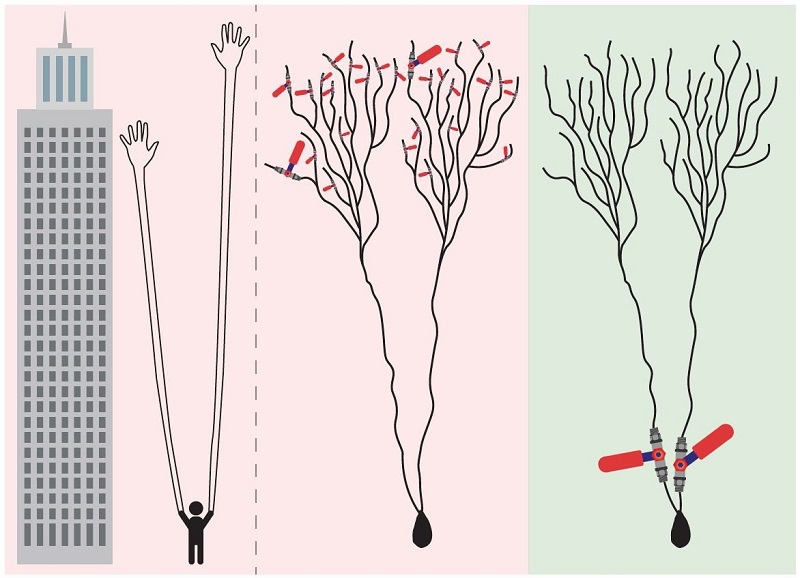

“Does it make sense to measure the quality of air we breathe via many tiny, distant satellite sensors at the elevation of a skyscraper, or by using one or several sensors in close proximity to the nose? Similarly, it is more efficient for the neuron to estimate its incoming signals close to its computational unit, the neuron.”

The New Way Our Brain Learns

Kanter provided the sketch above, which compares the old synaptic learning scenario, shown in pink, with the new dendritic learning scenario, shown in green. In the center, a neuron with two dendritic trees collects incoming signals via thousands of synapses, represented by red valves. In Kanter’s new dendritic learning scenario, shown on the right, only two adjustable red valves sit near the neuron, which is the computational element. The scale indicates that if the neuron receiving the incoming signals were represented by a person’s fingers, its ‘hands’, the dendritic trees, would be as tall as a skyscraper.

What this Discovery Means

Hebb’s axiom has been deeply rooted in neuroscience for 70 years, and few have dared to propose a different explanation. Moreover, because dendrites and synapses connect to the neuron in series, the exact site of the learning process seemed irrelevant.
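The arithmetic behind "in series" can be sketched with a toy model: if a signal passes through a synapse and then a dendrite, its effective strength is the product of the two gains, so turning up one dendritic gain has the same effect as turning up every synapse on that tree. The code below is a hypothetical illustration of that point and of the parameter counts Kanter contrasts (a few dendritic parameters versus thousands of synaptic ones). It is not the model used in the Scientific Reports study; the two-tree layout, the 1,000 synapses per tree, the name `dendrite_gains` and all numbers are assumptions.

```python
import random

# Hypothetical neuron: two dendritic trees, each carrying 1,000 synapses.
N_PER_TREE = 1000
random.seed(0)
synapses = [[random.uniform(0.0, 0.01) for _ in range(N_PER_TREE)] for _ in range(2)]
dendrite_gains = [1.0, 1.0]  # one adjustable "valve" per tree (assumed)


def neuron_input(inputs, synapses, dendrite_gains):
    """Total input: each tree's synapse-weighted sum, scaled by that tree's gain.

    Synapse and dendrite act in series, so each input's effective
    weight is dendrite_gain * synaptic_weight.
    """
    total = 0.0
    for tree_inputs, tree_weights, gain in zip(inputs, synapses, dendrite_gains):
        total += gain * sum(x * w for x, w in zip(tree_inputs, tree_weights))
    return total


inputs = [[1.0] * N_PER_TREE, [1.0] * N_PER_TREE]
before = neuron_input(inputs, synapses, dendrite_gains)

# Toy "dendritic" learning: change ONE parameter on tree 0.
dendrite_gains[0] = 2.0
dendritic = neuron_input(inputs, synapses, dendrite_gains)

# Toy "synaptic" learning: change all 1,000 weights on tree 0 instead.
dendrite_gains[0] = 1.0
scaled = [[2.0 * w for w in synapses[0]], synapses[1]]
synaptic = neuron_input(inputs, scaled, dendrite_gains)

print(abs(dendritic - synaptic) < 1e-9)
print("adaptive parameters:", len(dendrite_gains), "vs", 2 * N_PER_TREE)
```

Both routes raise the neuron’s total input by the same amount, which is why the site of learning looked irrelevant from the outside. The toy model only illustrates this arithmetic; it says nothing about how real neurons adapt, which is what the team’s experiments address.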

Kanter’s team also discovered that weak synapses play an important, previously overlooked role in the dynamics of our brain. Until the new study, neuroscientists had assumed that weak synapses, even though they make up the majority of the brain’s synapses, were insignificant.

In the new study, Kanter’s team found that weak synapses play a more significant role than the commonly held synaptic learning scenario postulates.

Because learning occurs at different sites in the brain than previously assumed, Kanter says his team’s findings call for a reevaluation of current medical treatments for brain disorders. As Kanter puts it,

“Hence, the popular phrase ‘neurons that fire together wire together,’ summarizing Donald Hebb’s 70-year-old hypothesis, must now be rephrased.”

Kanter also notes that AI developers have studied how the brain learns and used it as a model for artificial intelligence advances such as deep learning and neural networks. The new study, he says, changes the paradigm of how the brain learns and paves the way for AI applications that imitate the human mind at a much faster speed and with advanced features.

Disclaimer

Diagnosis, Treatment, and Advice: This article is intended for informational and educational purposes only and is not a substitute for qualified, professional medical advice. The opinions and information stated in this article should not be used during any medical emergency or for the diagnosis or treatment of any medical condition. Consult a qualified and licensed physician for the diagnosis and treatment of any and all medical conditions. Dial 9-1-1, or an equivalent emergency hotline number, for all medical emergencies. Also consult a licensed, qualified physician before changing your diet, supplement or exercise programs.

Photos, Endorsements, & External Links: This article is not intended to endorse organizations, companies, or their products. Links to external websites, mention or depiction of company names or brands, are intended for illustration only and do not constitute endorsements.